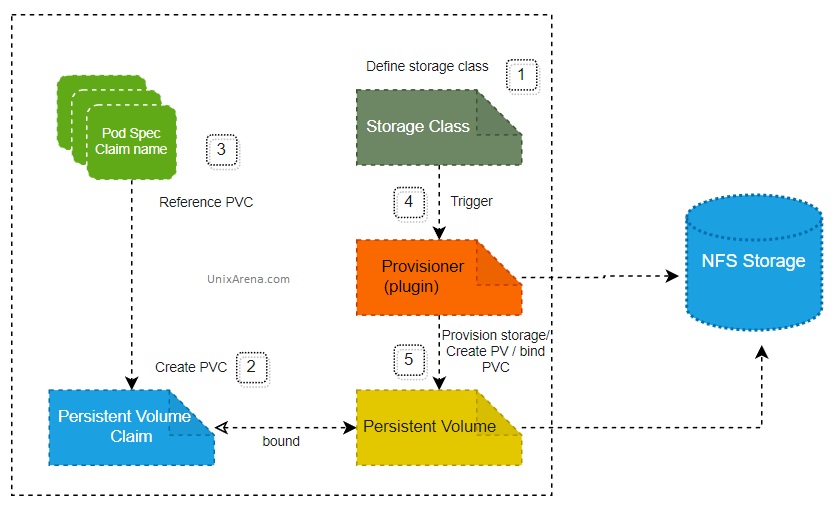

Dynamic volume provisioning reduces manual effort by creating volumes on demand. Without it, admins have to create each volume on the backend storage by hand and then create a PersistentVolume to represent it in Kubernetes. To enable dynamic storage provisioning, we need to create a StorageClass that names a provisioner. The provisioner is a volume plugin that is responsible for communicating with the backend storage to create the required volumes. Kubernetes ships with a number of volume provisioners, and you can also integrate third-party storage plugins.
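To make "a StorageClass that names a provisioner" concrete, here is a minimal sketch that writes such a manifest to a file. The field values are copied from the StorageClass shown later in this article; the file name and location are arbitrary choices of mine. The Helm chart used later creates this object on its own, so treat the file as something to read rather than something to apply.

```shell
#!/bin/sh
# Sketch only: write a minimal StorageClass manifest for inspection.
# The file name is hypothetical; the values mirror the StorageClass
# that appears later in this article.
sc_file="${TMPDIR:-/tmp}/storageclass-sketch.yaml"
cat > "$sc_file" <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
# The provisioner field names the plugin that creates volumes on demand.
provisioner: cluster.local/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"
reclaimPolicy: Delete
volumeBindingMode: Immediate
EOF

# Show the line that ties the class to its provisioner.
grep '^provisioner:' "$sc_file"
```

A PersistentVolumeClaim selects this class through `storageClassName`, and the named provisioner then creates the volume for it.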

Provisioner examples:

Here are some of the provisioners commonly used in Kubernetes clusters. The provisioners flagged as internal ship with Kubernetes (as of the v1.22 release used in this article). The NFS provisioner does not ship with Kubernetes, so we need an external provisioner to create the StorageClass for NFS volumes.

- AWSElasticBlockStore – Internal

- AzureFile – Internal

- AzureDisk – Internal

- Cinder – Internal

- GCEPersistentDisk – Internal

- Glusterfs – Internal

- NFS – External

- VsphereVolume – Internal

- Local – External

This article walks you through configuring the NFS external provisioner and demonstrates dynamic storage provisioning using an NFS-backed StorageClass.

Prerequisites:

- A Kubernetes cluster

- An NFS server to export the NFS share

Configure the NFS server on Ubuntu:

The NFS server set up here is for LAB purposes only. In an enterprise environment, you will typically get the NFS shares from enterprise storage systems such as NetApp and EMC.

1. Install the “nfs-kernel-server” package on the host which will be acting as the NFS server.

    root@loadbalancer1:~# apt install nfs-kernel-server
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    The following additional packages will be installed:
      keyutils libnfsidmap2 libtirpc-common libtirpc3 nfs-common rpcbind
    Suggested packages:
      watchdog
    The following NEW packages will be installed:
      keyutils libnfsidmap2 libtirpc-common libtirpc3 nfs-common nfs-kernel-server rpcbind
    0 upgraded, 7 newly installed, 0 to remove and 22 not upgraded.
    Need to get 504 kB of archives.
    After this operation, 1,938 kB of additional disk space will be used.
    Do you want to continue? [Y/n] y

2. Create the directory that will be shared with the Kubernetes cluster, then set its ownership and permissions.

    root@loadbalancer1:~# mkdir -p /mnt/k8s_nfs_storage
    root@loadbalancer1:~# chown -R nobody:nogroup /mnt/k8s_nfs_storage
    root@loadbalancer1:~# chmod 777 /mnt/k8s_nfs_storage

3. Update the /etc/exports file according to your environment. In my LAB setup, both the NFS server and K8s nodes are in the 172.16.16.0/24 network.

    /mnt/k8s_nfs_storage 172.16.16.0/24(rw,sync,no_subtree_check)
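If you script this step, it helps to avoid appending the same entry twice. The following is a minimal sketch under that assumption: `add_export` is a hypothetical helper of mine, and it is exercised against a scratch file rather than the real /etc/exports.

```shell
#!/bin/sh
# Sketch only: append an export entry unless the directory already has one.
# add_export is a hypothetical helper, not part of nfs-kernel-server.
add_export() {
    exports_file=$1   # e.g. /etc/exports
    share_dir=$2      # directory to export
    clients=$3        # network allowed to mount it
    options=$4        # export options

    # Skip the append when the first field of some line is this directory.
    if [ -f "$exports_file" ] &&
       awk -v d="$share_dir" '$1 == d { found = 1 } END { exit !found }' "$exports_file"; then
        return 0
    fi
    printf '%s %s(%s)\n' "$share_dir" "$clients" "$options" >> "$exports_file"
}

# Demonstrate against a scratch file; the second call is a no-op.
scratch=$(mktemp)
add_export "$scratch" /mnt/k8s_nfs_storage 172.16.16.0/24 rw,sync,no_subtree_check
add_export "$scratch" /mnt/k8s_nfs_storage 172.16.16.0/24 rw,sync,no_subtree_check
cat "$scratch"
```

On the NFS server itself, the first argument would be /etc/exports and the call would need root privileges.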

4. Export the share using the following command.

    root@loadbalancer1:~# exportfs -a
    root@loadbalancer1:~#

5. Restart the NFS server service.

    root@loadbalancer1:~# systemctl restart nfs-kernel-server

In my LAB environment, the firewall is disabled. If yours is enabled, you need to explicitly allow NFS traffic with firewall rules.

    root@loadbalancer1:~# ufw status
    Status: inactive
    root@loadbalancer1:~#
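If ufw were active, a rule along the following lines would be needed. This is a sketch only: `nfs_ufw_rule` is a hypothetical helper that just prints the command so it can be reviewed first, and the subnet is the one from my LAB. The `nfs` service name maps to port 2049, which should be enough for NFSv4 clients; NFSv3 clients also need rpcbind and mountd reachable, which this sketch does not cover.

```shell
#!/bin/sh
# Sketch only: print the ufw rule that would admit NFS clients from a subnet.
# nfs_ufw_rule is a hypothetical helper; it does not change the firewall.
nfs_ufw_rule() {
    printf 'ufw allow from %s to any port nfs\n' "$1"
}

# Print the rule for the lab subnet; review it, then run it as root on the NFS server.
nfs_ufw_rule 172.16.16.0/24
```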

Test the NFS client – K8s masters & workers:

Once you have the NFS server ready, you can configure the NFS client and test the filesystem access.

1. The NFS client package is needed on all the K8s master & worker nodes. List the nodes to see where it must be installed.

    root@kmaster1:~# kubectl get nodes
    NAME       STATUS   ROLES                  AGE   VERSION
    kmaster1   Ready    control-plane,master   45h   v1.22.0
    kmaster2   Ready    control-plane,master   45h   v1.22.0
    kmaster3   Ready    control-plane,master   44h   v1.22.0
    kworker1   Ready    <none>                 44h   v1.22.0
    root@kmaster1:~#

2. On each node, install the “nfs-common” package.

    root@kmaster1:~# apt install nfs-common

3. Test access to the NFS share by mounting it. If the mount succeeds on all the K8s masters and workers, we are ready to deploy the NFS Subdirectory External Provisioner.

    root@kmaster1:~# mount -t nfs 172.16.16.51:/mnt/k8s_nfs_storage /mnt
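A successful mount does not by itself prove that the share accepts writes, so a quick write test can be useful before moving on. The sketch below assumes nothing beyond a POSIX shell; `check_writable` is a hypothetical helper, and it is exercised here on a scratch directory rather than on the real mount point.

```shell
#!/bin/sh
# Sketch only: confirm that a directory accepts writes, then clean up.
# check_writable is a hypothetical helper, not part of nfs-common.
check_writable() {
    dir=$1
    probe="$dir/write-test.$$"
    if touch "$probe" 2>/dev/null; then
        rm -f "$probe"
        echo "OK: $dir is writable"
    else
        echo "FAIL: cannot write to $dir" >&2
        return 1
    fi
}

# On a node, after the mount command above succeeds:
#   check_writable /mnt && umount /mnt
# Here the helper is exercised on a scratch directory instead.
check_writable "$(mktemp -d)"
```

Unmounting afterwards keeps /mnt free on the node; the provisioner mounts the share on its own.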

NFS Subdirectory External Provisioner:

The NFS Subdirectory External Provisioner creates volumes as subdirectories of an NFS share that already exists and that all the master and worker nodes can access.

1. Add the helm repo for the nfs subdirectory external provisioner.

    root@kmaster1:~# helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
    "nfs-subdir-external-provisioner" has been added to your repositories
    root@kmaster1:~#

2. Create a new namespace and install the “nfs subdirectory external provisioner” helm chart.

    root@kmaster1:~# kubectl create ns nfsstorage
    namespace/nfsstorage created
    root@kmaster1:~# helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
        --set nfs.server=172.16.16.51 \
        --set nfs.path=/mnt/k8s_nfs_storage \
        -n nfsstorage
    NAME: nfs-subdir-external-provisioner
    LAST DEPLOYED: Mon Oct 17 15:11:20 2022
    NAMESPACE: nfsstorage
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    root@kmaster1:~#
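The same two settings can be kept in a values file instead of `--set` flags, which is easier to keep under version control. This is a minimal sketch: the file name `nfs-values.yaml` and its location are my own choices, and the script only prints the install command rather than running it.

```shell
#!/bin/sh
# Sketch only: keep the chart settings in a values file instead of --set flags.
# The file name nfs-values.yaml is an arbitrary choice.
values_file="${TMPDIR:-/tmp}/nfs-values.yaml"
cat > "$values_file" <<'EOF'
nfs:
  server: 172.16.16.51
  path: /mnt/k8s_nfs_storage
EOF

# Print the equivalent install command; run it where helm is configured.
echo "helm install nfs-subdir-external-provisioner" \
     "nfs-subdir-external-provisioner/nfs-subdir-external-provisioner" \
     "-f $values_file -n nfsstorage"
```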

3. Check the deployment status.

    root@kmaster1:~# kubectl get all -n nfsstorage
    NAME                                                   READY   STATUS    RESTARTS   AGE
    pod/nfs-subdir-external-provisioner-7b795f6785-266pb   1/1     Running   0          119s

    NAME                                              READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/nfs-subdir-external-provisioner   1/1     1            1           119s

    NAME                                                         DESIRED   CURRENT   READY   AGE
    replicaset.apps/nfs-subdir-external-provisioner-7b795f6785   1         1         1       119s
    root@kmaster1:~#
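In a script, waiting for the Deployment to become ready is more convenient than reading the table by eye. The sketch below uses `kubectl rollout status` for that; `wait_for_provisioner` is a hypothetical helper and the 120-second timeout is an arbitrary choice.

```shell
#!/bin/sh
# Sketch only: block until the provisioner Deployment reports ready.
# wait_for_provisioner is a hypothetical helper; the 120s timeout is arbitrary.
wait_for_provisioner() {
    kubectl rollout status deployment/nfs-subdir-external-provisioner \
        -n nfsstorage --timeout=120s
}

# Run only where kubectl is installed and pointed at the cluster.
if command -v kubectl >/dev/null 2>&1; then
    wait_for_provisioner || echo "provisioner is not ready yet" >&2
else
    echo "kubectl not found; skipping rollout check"
fi
```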

4. List the newly created StorageClass.

    root@kmaster1:~# kubectl get storageclass
    NAME         PROVISIONER                                     RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    nfs-client   cluster.local/nfs-subdir-external-provisioner   Delete          Immediate           true                   8m54s
    root@kmaster1:~# kubectl get storageclass nfs-client -o yaml
    allowVolumeExpansion: true
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      annotations:
        meta.helm.sh/release-name: nfs-subdir-external-provisioner
        meta.helm.sh/release-namespace: nfsstorage
      creationTimestamp: "2022-10-17T15:11:23Z"
      labels:
        app: nfs-subdir-external-provisioner
        app.kubernetes.io/managed-by: Helm
        chart: nfs-subdir-external-provisioner-4.0.17
        heritage: Helm
        release: nfs-subdir-external-provisioner
      name: nfs-client
      resourceVersion: "75613"
      uid: f87b150c-1649-4f85-ba91-0a1430c8fd9e
    parameters:
      archiveOnDelete: "true"
    provisioner: cluster.local/nfs-subdir-external-provisioner
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
    root@kmaster1:~#

Test NFS Subdirectory External Provisioner:

1. Create a manifest (pvc.yaml) for the PersistentVolumeClaim.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: test-claim
    spec:
      storageClassName: nfs-client
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Mi

2. Create the PVC and list it to check its status. If the status is “Bound”, dynamic storage provisioning works as expected.

    root@kmaster1:~# kubectl create -f pvc.yaml
    persistentvolumeclaim/test-claim created
    root@kmaster1:~# kubectl get pvc
    NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    test-claim   Bound    pvc-793bde66-c596-4405-9f6d-033d0f17baf3   1Mi        RWX            nfs-client     8s
    root@kmaster1:~#

3. We can also check the PersistentVolume that backs the above PVC.

    root@kmaster1:~# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS   REASON   AGE
    pvc-793bde66-c596-4405-9f6d-033d0f17baf3   1Mi        RWX            Delete           Bound    default/test-claim   nfs-client              61s
    root@kmaster1:~#
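A bound PVC shows that provisioning worked; writing a file through it shows that a pod can actually use the volume. The sketch below writes a Pod manifest that mounts `test-claim` and creates a file in it. The names `test-pod`, `nfs-pvc` and `SUCCESS`, the `busybox:stable` image, and the output location are illustrative choices of mine, not something the steps above prescribe.

```shell
#!/bin/sh
# Sketch only: write a Pod manifest that mounts test-claim and creates a file.
# The names test-pod, nfs-pvc and the file SUCCESS are illustrative choices.
pod_file="${TMPDIR:-/tmp}/test-pod.yaml"
cat > "$pod_file" <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  restartPolicy: Never
  containers:
    - name: test-pod
      image: busybox:stable
      command: ["/bin/sh", "-c", "touch /mnt/SUCCESS"]
      volumeMounts:
        - name: nfs-pvc
          mountPath: /mnt
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim
EOF

echo "Wrote $pod_file; apply it with: kubectl create -f $pod_file"
```

Once the pod has completed, the SUCCESS file should be visible on the NFS server in a subdirectory of /mnt/k8s_nfs_storage. As far as I know, the provisioner names that subdirectory after the namespace, the PVC name and the PV name by default.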

We have successfully deployed the NFS Subdirectory External Provisioner to use NFS shares in a Kubernetes cluster for persistent storage. In the first part, we set up the NFS server and tested the share from the Kubernetes master and worker nodes. In the second part, we deployed the NFS Subdirectory External Provisioner and tested dynamic provisioning by creating a new PVC.