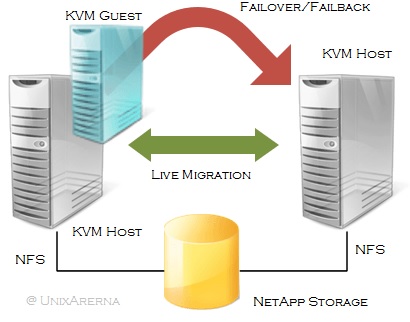

If you have followed the KVM article series on UnixArena, you might have read the article about KVM guest live migration. KVM supports guest live migration (similar to VMware vMotion), but to provide high availability (like VMware HA) you need a cluster setup. In this article, we will configure a KVM guest as a cluster resource with live migration support. If you move the KVM guest resource manually, the cluster performs a live migration; if a hardware or hypervisor failure occurs on the KVM host, the guest is started on another available cluster node (with minimal downtime). I will be using the existing KVM and Red Hat cluster setup to demonstrate this:

- KVM hypervisor – RHEL 7.2

- Red Hat cluster nodes – UA-HA & UA-HA2

- Shared storage – NFS (as an alternative, you can also use GFS2)

- KVM guest – UAKVM2

1. Log in to one of the cluster nodes and shut down the KVM guest.

    [root@UA-HA ~]# virsh shutdown UAKVM2
    [root@UA-HA ~]# virsh list --all
     Id Name State
    ----------------------------------------------------
     - UAKVM2 shut off
    [root@UA-HA ~]#
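
Note that `virsh shutdown` only asks the guest OS to shut down and returns straight away, so the guest can keep running for a while. Before copying and undefining it, it is worth waiting until the domain really reports "shut off". Here is a minimal Python sketch that polls `virsh domstate`; the helper names and the 120-second timeout are assumptions, not part of the original procedure.

```python
import subprocess
import time
from typing import Callable


def virsh_domstate(domain: str) -> str:
    """Return the state printed by `virsh domstate <domain>`, e.g. 'running' or 'shut off'."""
    result = subprocess.run(
        ["virsh", "domstate", domain],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def wait_for_state(
    get_state: Callable[[], str],
    wanted: str = "shut off",
    timeout: float = 120.0,
    interval: float = 2.0,
) -> bool:
    """Poll get_state() until it returns `wanted`; give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        if get_state() == wanted:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

On UA-HA you would call `wait_for_state(lambda: virsh_domstate("UAKVM2"))` right after `virsh shutdown UAKVM2`. Passing the state getter in as a function keeps the polling logic independent of virsh; if it times out, you can decide whether to investigate the guest or force it off with `virsh destroy`.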

2. Copy the guest domain configuration file (XML) to the NFS path.

    [root@UA-HA qemu_config]# cd /etc/libvirt/qemu/
    [root@UA-HA qemu]# ls -lrt
    total 8
    drwx------. 3 root root 40 Dec 14 09:13 networks
    drwxr-xr-x. 2 root root 6 Dec 16 16:16 autostart
    -rw------- 1 root root 3676 Dec 23 02:52 UAKVM2.xml
    [root@UA-HA qemu]#
    [root@UA-HA qemu]# cp UAKVM2.xml /kvmpool/qemu_config
    [root@UA-HA qemu]# ls -lrt /kvmpool/qemu_config
    total 4
    -rw------- 1 root root 3676 Dec 23 08:14 UAKVM2.xml
    [root@UA-HA qemu]#
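
For the cluster to start or live-migrate the guest on either node, the guest's disk images (not just the XML) must be reachable at the same path on both nodes, i.e. on the shared storage. A minimal Python sketch that lists disk sources in the copied XML that fall outside the shared mount; it assumes the NFS share is mounted at /kvmpool on both nodes (the article only shows /kvmpool/qemu_config), and the function name is hypothetical. It needs Python 3.9+ for `PurePosixPath.is_relative_to`.

```python
import xml.etree.ElementTree as ET
from pathlib import PurePosixPath
from typing import List


def disks_outside_shared(xml_text: str, shared_root: str = "/kvmpool") -> List[str]:
    """Return disk source paths in a libvirt domain XML that are NOT under shared_root."""
    root = ET.fromstring(xml_text)
    outside = []
    for source in root.findall("./devices/disk/source"):
        # File-backed disks use the 'file' attribute; block devices use 'dev'.
        path = source.get("file") or source.get("dev")
        if path is None:
            continue  # e.g. network disks; this sketch does not check them
        if not PurePosixPath(path).is_relative_to(shared_root):
            outside.append(path)
    return outside
```

For example, `disks_outside_shared(open("/kvmpool/qemu_config/UAKVM2.xml").read())` should return an empty list before you undefine the guest; any path it returns lives on local storage of UA-HA only.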

3. Undefine the KVM guest so that it can be configured as a cluster resource.

    [root@UA-HA qemu]# virsh undefine UAKVM2
    Domain UAKVM2 has been undefined
    [root@UA-HA qemu]# virsh list --all
     Id Name State
    ----------------------------------------------------
    [root@UA-HA qemu]#

4. Check the pacemaker cluster status.

    [root@UA-HA ~]# pcs status
    Cluster name: UABLR
    Last updated: Mon Dec 28 22:44:59 2015 Last change: Mon Dec 28 21:16:56 2015 by root via crm_resource on UA-HA2
    Stack: corosync
    Current DC: UA-HA2 (version 1.1.13-10.el7-44eb2dd) - partition with quorum
    2 nodes and 4 resources configured

    Online: [ UA-HA UA-HA2 ]

    Full list of resources:

     Resource Group: WEBRG1
         ClusterIP (ocf::heartbeat:IPaddr2): Started UA-HA2
         vgres (ocf::heartbeat:LVM): Started UA-HA2
         webvolfs (ocf::heartbeat:Filesystem): Started UA-HA2
         webres (ocf::heartbeat:apache): Started UA-HA2

    PCSD Status:
      UA-HA: Online
      UA-HA2: Online

    Daemon Status:
      corosync: active/enabled
      pacemaker: active/enabled
      pcsd: active/enabled
    [root@UA-HA ~]#
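
What you are looking for in this output is that both nodes are online and the corosync, pacemaker and pcsd daemons are active/enabled. If you want to script that check, here is a minimal Python sketch that works on captured `pcs status` text; it assumes the RHEL 7.2 output layout shown above, and `cluster_ready` is a hypothetical name.

```python
import re
from typing import Iterable


def cluster_ready(status_text: str, nodes: Iterable[str]) -> bool:
    """True if every node is listed as Online and corosync/pacemaker/pcsd are active/enabled."""
    online_match = re.search(r"^\s*Online:\s*\[(.*?)\]", status_text, re.MULTILINE)
    online = set(online_match.group(1).split()) if online_match else set()
    if not set(nodes) <= online:
        return False
    # Lines such as "corosync: active/enabled" under "Daemon Status:".
    daemons = dict(
        re.findall(r"^\s*(corosync|pacemaker|pcsd):\s*(\S+)", status_text, re.MULTILINE)
    )
    return all(
        daemons.get(name) == "active/enabled" for name in ("corosync", "pacemaker", "pcsd")
    )
```

This sketch treats anything other than active/enabled as "not ready"; relax it if you deliberately keep the cluster daemons disabled at boot.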

5. To manage the KVM guest, you need the resource agent called “VirtualDomain”. Let’s create a new VirtualDomain resource using the UAKVM2.xml file that we stored in /kvmpool/qemu_config.

    [root@UA-HA ~]# pcs resource create UAKVM2_res VirtualDomain \
        hypervisor="qemu:///system" \
        config="/kvmpool/qemu_config/UAKVM2.xml" \
        migration_transport=ssh \
        op start timeout="120s" \
        op stop timeout="120s" \
        op monitor timeout="30" interval="10" \
        meta allow-migrate="true" priority="100" \
        op migrate_from interval="0" timeout="120s" \
        op migrate_to interval="0" timeout="120" \
        --group UAKVM2
    [root@UA-HA ~]#
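
If you generate this command from a script, building it as an argument list avoids shell quoting entirely and lets you check that every `op` keyword is followed by an operation name and at least one option, which pcs insists on. A minimal Python sketch; `pcs_create_argv` is a hypothetical helper, and it places all `op` groups before `meta`, following the order in the pcs usage synopsis rather than the exact order typed above.

```python
import shlex
from typing import Dict, List, Optional


def pcs_create_argv(
    resource_id: str,
    agent: str,
    params: Dict[str, str],
    ops: Dict[str, Dict[str, str]],
    meta: Dict[str, str],
    group: Optional[str] = None,
) -> List[str]:
    """Build a `pcs resource create` argument list (no shell quoting needed)."""
    argv = ["pcs", "resource", "create", resource_id, agent]
    argv += [f"{key}={value}" for key, value in params.items()]
    for op_name, options in ops.items():
        # Each 'op' must be followed by a name and at least one option.
        if not options:
            raise ValueError(f"operation {op_name!r} needs at least one option")
        argv += ["op", op_name] + [f"{k}={v}" for k, v in options.items()]
    if meta:
        argv += ["meta"] + [f"{k}={v}" for k, v in meta.items()]
    if group:
        argv += ["--group", group]
    return argv


# The values used in step 5, expressed as data.
STEP5_ARGV = pcs_create_argv(
    "UAKVM2_res",
    "VirtualDomain",
    params={
        "hypervisor": "qemu:///system",
        "config": "/kvmpool/qemu_config/UAKVM2.xml",
        "migration_transport": "ssh",
    },
    ops={
        "start": {"timeout": "120s"},
        "stop": {"timeout": "120s"},
        "monitor": {"timeout": "30", "interval": "10"},
        "migrate_from": {"interval": "0", "timeout": "120s"},
        "migrate_to": {"interval": "0", "timeout": "120"},
    },
    meta={"allow-migrate": "true", "priority": "100"},
    group="UAKVM2",
)
print(shlex.join(STEP5_ARGV))
```

Running it with `subprocess.run(STEP5_ARGV, check=True)` hands the arguments to pcs directly, with no shell in between, so nothing depends on how quotes were typed or pasted.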

6. Check the cluster status.

    [root@UA-HA ~]# pcs status
    Cluster name: UABLR
    Last updated: Mon Dec 28 22:51:36 2015 Last change: Mon Dec 28 22:51:36 2015 by root via crm_resource on UA-HA
    Stack: corosync
    Current DC: UA-HA2 (version 1.1.13-10.el7-44eb2dd) - partition with quorum
    2 nodes and 5 resources configured

    Online: [ UA-HA UA-HA2 ]

    Full list of resources:

     Resource Group: WEBRG1
         ClusterIP (ocf::heartbeat:IPaddr2): Started UA-HA2
         vgres (ocf::heartbeat:LVM): Started UA-HA2
         webvolfs (ocf::heartbeat:Filesystem): Started UA-HA2
         webres (ocf::heartbeat:apache): Started UA-HA2
     Resource Group: UAKVM2
         UAKVM2_res (ocf::heartbeat:VirtualDomain): Started UA-HA

    PCSD Status:
      UA-HA: Online
      UA-HA2: Online

    Daemon Status:
      corosync: active/enabled
      pacemaker: active/enabled
      pcsd: active/enabled
    [root@UA-HA ~]#
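
To find out from a script which node the guest resource landed on, you can scan `pcs status` for the resource's "Started" line. A minimal Python sketch, assuming the line format shown above; it also accepts an optional leading `* `, which I believe later pcs releases print in front of resource lines (treat that as an assumption). `started_on` is a hypothetical helper.

```python
import re
from typing import Optional

# Matches lines such as "UAKVM2_res (ocf::heartbeat:VirtualDomain): Started UA-HA".
RESOURCE_LINE = re.compile(
    r"^\s*(?:\*\s+)?(\S+)\s+\(([^)]+)\):\s+Started\s+(\S+)", re.MULTILINE
)


def started_on(status_text: str, resource: str) -> Optional[str]:
    """Return the node on which `resource` is reported as Started, or None."""
    for name, _agent, node in RESOURCE_LINE.findall(status_text):
        if name == resource:
            return node
    return None
```

Against the output above, `started_on(output, "UAKVM2_res")` gives "UA-HA"; a resource that is stopped or not listed gives None.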

7. The KVM guest “UAKVM2” should now be defined and started automatically. Check the running VM using the following command.

    [root@UA-HA ~]# virsh list
     Id Name State
    ----------------------------------------------------
     2 UAKVM2 running
    [root@UA-HA ~]#

8. Pacemaker also supports live migration of KVM guests. To migrate the KVM guest to the other KVM host on the fly, use the following command.

    [root@UA-HA ~]# pcs resource move UAKVM2 UA-HA2
    [root@UA-HA ~]#

In the above command, UAKVM2 is the resource group name and UA-HA2 is the target cluster node name.
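
One thing to keep in mind: `pcs resource move` works by adding a location constraint (typically named `cli-prefer-UAKVM2`) that keeps preferring UA-HA2 until you remove it with `pcs resource clear UAKVM2`. The Python sketch below wraps move, wait, and clear. `run` and `locate` are hypothetical callables you supply, for example `lambda argv: subprocess.run(argv, check=True)` and a function that reads the current node from `pcs status`; the timeouts are arbitrary.

```python
import time
from typing import Callable, Optional, Sequence


def move_and_clear(
    group: str,
    target: str,
    run: Callable[[Sequence[str]], object],
    locate: Callable[[], Optional[str]],
    timeout: float = 180.0,
    interval: float = 5.0,
) -> bool:
    """Move `group` to `target`, wait until it runs there, then drop the move constraint."""
    run(["pcs", "resource", "move", group, target])
    deadline = time.monotonic() + timeout
    while locate() != target:
        if time.monotonic() >= deadline:
            # Leave the constraint in place so it can be inspected.
            return False
        time.sleep(interval)
    # 'move' leaves a location constraint behind; 'clear' removes it.
    run(["pcs", "resource", "clear", group])
    return True
```

Whether to clear the constraint right away is a judgment call: once it is gone, the cluster is free to place the guest on any node again, which is usually what you want so that a later failover is not held back by an old preference.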

9. Check the cluster status.

    [root@UA-HA ~]# pcs status
    Cluster name: UABLR
    Last updated: Mon Dec 28 22:54:51 2015 Last change: Mon Dec 28 22:54:38 2015 by root via crm_resource on UA-HA
    Stack: corosync
    Current DC: UA-HA2 (version 1.1.13-10.el7-44eb2dd) - partition with quorum
    2 nodes and 5 resources configured

    Online: [ UA-HA UA-HA2 ]

    Full list of resources:

     Resource Group: WEBRG1
         ClusterIP (ocf::heartbeat:IPaddr2): Started UA-HA2
         vgres (ocf::heartbeat:LVM): Started UA-HA2
         webvolfs (ocf::heartbeat:Filesystem): Started UA-HA2
         webres (ocf::heartbeat:apache): Started UA-HA2
     Resource Group: UAKVM2
         UAKVM2_res (ocf::heartbeat:VirtualDomain): Started UA-HA2

    PCSD Status:
      UA-HA: Online
      UA-HA2: Online

    Daemon Status:
      corosync: active/enabled
      pacemaker: active/enabled
      pcsd: active/enabled
    [root@UA-HA ~]#

10. List the VMs using the virsh command. You can see that the VM has moved from UA-HA to UA-HA2.

    [root@UA-HA ~]# virsh list --all
     Id Name State
    ----------------------------------------------------
    [root@UA-HA ~]# ssh UA-HA2 virsh list
     Id Name State
    ----------------------------------------------------
     2 UAKVM2 running
    [root@UA-HA ~]#
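
Because the guest's disk sits on shared storage, it must never run on both hosts at the same time. A quick sanity check after a move is to collect `virsh list` output from every node (as done above with `ssh UA-HA2 virsh list`) and confirm that exactly one node reports the domain as running. A minimal Python sketch of the parsing side; the function names are hypothetical, and it assumes the `Id Name State` layout shown above and domain names without spaces.

```python
from typing import Dict, List


def parse_virsh_list(output: str) -> Dict[str, str]:
    """Map domain name -> state from `virsh list` or `virsh list --all` output."""
    domains = {}
    for line in output.splitlines():
        # Split into at most 3 fields so multi-word states like "shut off" stay intact.
        fields = line.split(None, 2)
        # Skip blank lines, the dashed separator and the "Id Name State" header.
        if len(fields) < 3 or fields[0] == "Id":
            continue
        _dom_id, name, state = fields
        domains[name] = state.strip()
    return domains


def nodes_running(outputs: Dict[str, str], domain: str) -> List[str]:
    """Given {node: virsh list output}, return the sorted nodes where `domain` is running."""
    return sorted(
        node for node, text in outputs.items()
        if parse_virsh_list(text).get(domain) == "running"
    )
```

With the two listings above collected into `{"UA-HA": ..., "UA-HA2": ...}`, `nodes_running(outputs, "UAKVM2")` should be `["UA-HA2"]`; a result with two entries would mean the guest is running twice and needs immediate attention.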

During this live migration, you should not notice even a single dropped packet. That’s really cool.

Hope this article is informative to you.

John says

When I try to run the pcs resource create command, it fails with an error:

error: When using ‘op’ you must specify an operation name and at least one option.

Please tell me how to fix it.