NetApp clustered Data ONTAP uses three types of Vservers, which manage the nodes, the cluster, and data access for clients.

- Node Vserver: responsible for managing a node. It is created automatically when the node joins the cluster.

- Admin Vserver: responsible for managing the entire cluster. It is created automatically during cluster setup.

- Data Vserver: the cluster administrator must create data Vservers and add volumes to them to provide data access from the cluster. A cluster must have at least one data Vserver to serve data to its clients.

A data virtual storage server (Vserver) contains data volumes and one or more LIFs through which it serves data to clients. A data Vserver can contain FlexVol volumes or an Infinite Volume. It securely isolates the shared virtualized data storage and network, and appears to its clients as a single dedicated server. Each Vserver has a separate administrator authentication domain and can be managed independently by a Vserver administrator. A cluster can have one or more Vservers with FlexVol volumes and Vservers with Infinite Volumes.

Vserver:

1. Log in to the cluster management LIF and check the existing Vservers.

NetUA::> vserver show

Admin Root Name Name

Vserver Type State Volume Aggregate Service Mapping

----------- ------- --------- ---------- ---------- ------- -------

NetUA admin - - - - -

NetUA-01 node - - - - -

NetUA-02 node - - - - -

3 entries were displayed.

NetUA::>

These Vservers were created automatically when the cluster was configured. We still need to create a data Vserver for the clients.
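If you script these checks, you can test the rule from the list above against the `vserver show` text: a cluster needs at least one data Vserver to serve clients. The Python sketch below is only an illustration. The function names are hypothetical, and the code parses output you have captured from the CLI rather than calling any NetApp API. It assumes every real row begins with the Vserver name followed by its type.

```python
# Hypothetical helpers: parse captured `vserver show` output and check
# whether the cluster already has a data Vserver.
VSERVER_TYPES = {"admin", "node", "data"}

# Output copied from step 1 (the cluster before any data Vserver exists).
STEP1_OUTPUT = """\
Admin Root Name Name
Vserver Type State Volume Aggregate Service Mapping
----------- ------- --------- ---------- ---------- ------- -------
NetUA admin - - - - -
NetUA-01 node - - - - -
NetUA-02 node - - - - -
3 entries were displayed.
"""


def vserver_types(output: str) -> dict[str, str]:
    """Map each Vserver name to its type (admin, node or data)."""
    result: dict[str, str] = {}
    for line in output.splitlines():
        fields = line.split()
        # Header, separator, summary and wrapped continuation lines
        # (such as "root aggr1") have no Vserver type in the second column.
        if len(fields) >= 2 and fields[1] in VSERVER_TYPES:
            result[fields[0]] = fields[1]
    return result


def has_data_vserver(output: str) -> bool:
    """True if at least one row in the output is a data Vserver."""
    return "data" in vserver_types(output).values()


if __name__ == "__main__":
    print(vserver_types(STEP1_OUTPUT))
    print("data Vserver present:", has_data_vserver(STEP1_OUTPUT))
```

On the step 1 output this reports no data Vserver. Once the `ua_vs1 data running ...` row from step 5 is present, `has_data_vserver` returns True.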

2. Check the existing volumes on the cluster.

NetUA::> volume show

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

NetUA-01 vol0 aggr0_01 online RW 851.5MB 421.8MB 50%

NetUA-02 vol0 aggr0_02 online RW 851.5MB 421.0MB 50%

2 entries were displayed.

NetUA::>

3. Check the available aggregates on the cluster.

NetUA::> storage aggregate show

Aggregate Size Available Used% State #Vols Nodes RAID Status

--------- -------- --------- ----- ------- ------ ---------------- ------------

NetUA01_aggr1

4.39GB 4.39GB 0% online 0 NetUA-01 raid_dp,

normal

aggr0_01 900MB 43.54MB 95% online 1 NetUA-01 raid_dp,

normal

aggr0_02 900MB 43.54MB 95% online 1 NetUA-02 raid_dp,

normal

3 entries were displayed.

NetUA::>
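The Used% column is easy to sanity-check by hand. The sketch below assumes Used% is (Size − Available) / Size, truncated to a whole percent. That assumption reproduces the aggregate and volume rows shown in this article, but ONTAP's own space accounting (reserves, metadata) may give different figures on other systems. `used_percent` is a hypothetical helper.

```python
import math


def used_percent(size: float, available: float) -> int:
    """Approximate Used% from Size and Available (same unit for both).

    Assumption: Used% = (Size - Available) / Size, truncated.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    return math.floor((size - available) / size * 100)


# (name, size, available) from the aggregate output above. NetUA01_aggr1 is
# in GB and the others in MB; that is fine because only the ratio matters.
AGGREGATES = [
    ("NetUA01_aggr1", 4.39, 4.39),
    ("aggr0_01", 900, 43.54),
    ("aggr0_02", 900, 43.54),
]

if __name__ == "__main__":
    for name, size, available in AGGREGATES:
        print(f"{name}: {used_percent(size, available)}%")
```

For example, aggr0_01 has 900MB in total and 43.54MB free, which works out to about 95.2% used and is displayed as 95%.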

4. Create a data Vserver named ua_vs1 with a root volume named “ua_vs1_root” on aggregate NetUA01_aggr1.

NetUA::> vserver create -vserver ua_vs1 -rootvolume ua_vs1_root -aggregate NetUA01_aggr1 -ns-switch file -rootvolume-security-style unix

[Job 103] Job succeeded: Vserver creation completed

5. List the Vservers again.

NetUA::> vserver show

Admin Root Name Name

Vserver Type State Volume Aggregate Service Mapping

----------- ------- --------- ---------- ---------- ------- -------

NetUA admin - - - - -

NetUA-01 node - - - - -

NetUA-02 node - - - - -

ua_vs1 data running ua_vs1_ NetUA01_ file file

root aggr1

4 entries were displayed.

NetUA::>

We can see that the new data Vserver has been created successfully and that its root volume resides on aggregate “NetUA01_aggr1”.

6. List the volumes again.

NetUA::> vol show

(volume show)

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

NetUA-01 vol0 aggr0_01 online RW 851.5MB 420.5MB 50%

NetUA-02 vol0 aggr0_02 online RW 851.5MB 420.4MB 50%

ua_vs1 ua_vs1_root NetUA01_aggr1

online RW 20MB 18.89MB 5%

3 entries were displayed.

NetUA::>

You can see that the ua_vs1_root volume has been created with a size of 20MB. This is the root volume of the data SVM ua_vs1 (SVM stands for storage virtual machine, the newer name for a Vserver).

7. Check the Vserver properties.

NetUA::> vserver show -vserver ua_vs1

Vserver: ua_vs1

Vserver Type: data

Vserver UUID: d1ece3f0-9b76-11e5-b3cd-123478563412

Root Volume: ua_vs1_root

Aggregate: NetUA01_aggr1

Name Service Switch: file

Name Mapping Switch: file

NIS Domain: -

Root Volume Security Style: unix

LDAP Client: -

Default Volume Language Code: C.UTF-8

Snapshot Policy: default

Comment:

Antivirus On-Access Policy: default

Quota Policy: default

List of Aggregates Assigned: -

Limit on Maximum Number of Volumes allowed: unlimited

Vserver Admin State: running

Allowed Protocols: nfs, cifs, fcp, iscsi, ndmp

Disallowed Protocols: -

Is Vserver with Infinite Volume: false

QoS Policy Group: -

NetUA::>

If you want to allow only the NFS protocol on this Vserver, modify it with the following command.

NetUA::> vserver modify -vserver ua_vs1 -allowed-protocols nfs

NetUA::> vserver show -vserver ua_vs1

Vserver: ua_vs1

Vserver Type: data

Vserver UUID: d1ece3f0-9b76-11e5-b3cd-123478563412

Root Volume: ua_vs1_root

Aggregate: NetUA01_aggr1

Name Service Switch: file

Name Mapping Switch: file

NIS Domain: -

Root Volume Security Style: unix

LDAP Client: -

Default Volume Language Code: C.UTF-8

Snapshot Policy: default

Comment:

Antivirus On-Access Policy: default

Quota Policy: default

List of Aggregates Assigned: -

Limit on Maximum Number of Volumes allowed: unlimited

Vserver Admin State: running

Allowed Protocols: nfs

Disallowed Protocols: cifs, fcp, iscsi, ndmp

Is Vserver with Infinite Volume: false

QoS Policy Group: -

NetUA::>
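In the two listings above, Disallowed Protocols is simply every protocol that is no longer allowed. The minimal Python sketch below shows that relationship. It assumes the five protocols listed on this system (nfs, cifs, fcp, iscsi, ndmp) are the complete set; other ONTAP releases may list more. `split_protocols` is a hypothetical name.

```python
# Protocols shown in "Allowed Protocols" before the modify command.
ALL_PROTOCOLS = ("nfs", "cifs", "fcp", "iscsi", "ndmp")


def split_protocols(allowed: list[str]) -> tuple[list[str], list[str]]:
    """Return (allowed, disallowed) in the order ONTAP printed them above."""
    allowed_set = set(allowed)
    unknown = allowed_set - set(ALL_PROTOCOLS)
    if unknown:
        raise ValueError(f"unknown protocol(s): {sorted(unknown)}")
    kept = [p for p in ALL_PROTOCOLS if p in allowed_set]
    dropped = [p for p in ALL_PROTOCOLS if p not in allowed_set]
    return kept, dropped


if __name__ == "__main__":
    # Equivalent of: vserver modify -vserver ua_vs1 -allowed-protocols nfs
    kept, dropped = split_protocols(["nfs"])
    print("Allowed Protocols:", ", ".join(kept))
    print("Disallowed Protocols:", ", ".join(dropped))
```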

8. Check the junction path of the root volume.

NetUA::> volume show -vserver ua_vs1 -volume ua_vs1_root -fields junction-path

vserver volume junction-path

------- ----------- -------------

ua_vs1 ua_vs1_root /

NetUA::>
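The `-fields` form of a show command prints a compact table: a header row, a dashed separator, then one row per object. That layout makes it convenient to read from scripts. The minimal Python sketch below parses captured output under two assumptions: only the last column may contain spaces, and prompt lines (containing `::>`) and the "entries were displayed" summary are ignored. `parse_fields` is a hypothetical helper, not part of any NetApp tool.

```python
def parse_fields(output: str) -> list[dict[str, str]]:
    """Parse the table printed by an ONTAP `show ... -fields ...` command."""
    lines = [line for line in output.splitlines() if line.strip()]
    # The separator is the first line (after a header) made only of dashes.
    for i, line in enumerate(lines):
        if i > 0 and set(line.replace(" ", "")) == {"-"}:
            break
    else:
        raise ValueError("no header/separator found")
    header = lines[i - 1].split()
    rows = []
    for line in lines[i + 1:]:
        if "::>" in line or "displayed" in line:
            continue  # CLI prompt or summary line, not data
        # maxsplit keeps any spaces inside the final column's value
        values = line.split(maxsplit=len(header) - 1)
        if len(values) == len(header):
            rows.append(dict(zip(header, values)))
    return rows


JUNCTION_OUTPUT = """\
NetUA::> volume show -vserver ua_vs1 -volume ua_vs1_root -fields junction-path
vserver volume      junction-path
------- ----------- -------------
ua_vs1  ua_vs1_root /
NetUA::>
"""

if __name__ == "__main__":
    for row in parse_fields(JUNCTION_OUTPUT):
        print(row["volume"], "is mounted at", row["junction-path"])
```

The same helper works for the `-fields auto-revert` output used later in this article.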

9. How do clients access this data Vserver? To access the SVM (data Vserver), you need to create a data LIF. Let’s create a NAS data LIF for SVM ua_vs1. NFS and CIFS clients will use its IP address to access the shares.

NetUA::> net int create -vserver ua_vs1 -lif uadata1 -role data -home-node NetUA-01 -home-port e0c -address 192.168.0.123 -netmask 255.255.255.0

(network interface create)

NetUA::>

10. Review the newly created data LIF for “ua_vs1” SVM.

NetUA::> net int show

(network interface show)

Logical Status Network Current Current Is

Vserver Interface Admin/Oper Address/Mask Node Port Home

----------- ---------- ---------- ------------------ ------------- ------- ----

NetUA

cluster_mgmt up/up 192.168.0.101/24 NetUA-01 e0f false

NetUA-01

clus1 up/up 169.254.81.224/16 NetUA-01 e0a true

clus2 up/up 169.254.220.127/16 NetUA-01 e0b true

mgmt1 up/up 192.168.0.91/24 NetUA-01 e0f true

NetUA-02

clus1 up/up 169.254.124.94/16 NetUA-02 e0a true

clus2 up/up 169.254.244.74/16 NetUA-02 e0b true

mgmt1 up/up 192.168.0.92/24 NetUA-02 e0f true

ua_vs1

uadata1 up/up 192.168.0.123/24 NetUA-01 e0c true

8 entries were displayed.

NetUA::>

11. To see detailed information about the LIF, use the following command.

NetUA::> net int show -vserver ua_vs1 -lif uadata1

(network interface show)

Vserver Name: ua_vs1

Logical Interface Name: uadata1

Role: data

Data Protocol: nfs, cifs, fcache

Home Node: NetUA-01

Home Port: e0c

Current Node: NetUA-01

Current Port: e0c

Operational Status: up

Extended Status: -

Is Home: true

Network Address: 192.168.0.123

Netmask: 255.255.255.0

Bits in the Netmask: 24

IPv4 Link Local: -

Routing Group Name: d192.168.0.0/24

Administrative Status: up

Failover Policy: nextavail

Firewall Policy: data

Auto Revert: false

Fully Qualified DNS Zone Name: none

DNS Query Listen Enable: false

Failover Group Name: system-defined

FCP WWPN: -

Address family: ipv4

Comment: -

NetUA::>
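The Netmask, Bits in the Netmask and Routing Group Name fields above are related: the routing group suffix is the network defined by the LIF's address and netmask. Python's standard `ipaddress` module can confirm the arithmetic. This is a small illustration using the values from this LIF, and `lif_network` is a hypothetical name.

```python
import ipaddress


def lif_network(address: str, netmask: str) -> tuple[int, str]:
    """Return (prefix length, network) for a LIF address and dotted netmask."""
    iface = ipaddress.IPv4Interface(f"{address}/{netmask}")
    return iface.network.prefixlen, str(iface.network)


if __name__ == "__main__":
    bits, network = lif_network("192.168.0.123", "255.255.255.0")
    print("Bits in the Netmask:", bits)
    print("Network:", network)
```

For uadata1 this gives 24 bits and the network 192.168.0.0/24, which matches the d192.168.0.0/24 routing group.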

12. NFS and CIFS clients might be on a different network from the data LIF. In that case, you need to configure a default route (gateway) for the data LIF so that it can reach the NFS and CIFS clients. First, review the automatically created routing groups.

NetUA::> network routing-groups show

Routing

Vserver Group Subnet Role Metric

--------- --------- --------------- ------------ -------

NetUA

c192.168.0.0/24

192.168.0.0/24 cluster-mgmt 20

NetUA-01

c169.254.0.0/16

169.254.0.0/16 cluster 30

n192.168.0.0/24

192.168.0.0/24 node-mgmt 10

NetUA-02

c169.254.0.0/16

169.254.0.0/16 cluster 30

n192.168.0.0/24

192.168.0.0/24 node-mgmt 10

ua_vs1

d192.168.0.0/24

192.168.0.0/24 data 20

6 entries were displayed.

NetUA::>
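In the output above, each routing group name appears to be a one-letter role prefix followed by the subnet: `c` for cluster and cluster-mgmt, `n` for node-mgmt and `d` for data. This mapping is inferred only from these six entries, not from NetApp documentation, so treat it as an observation. The sketch just checks that the inferred rule reproduces every name listed.

```python
# Role prefixes inferred from the `network routing-groups show` output above.
ROLE_PREFIX = {
    "cluster": "c",
    "cluster-mgmt": "c",
    "node-mgmt": "n",
    "data": "d",
}

# (Vserver, routing group, subnet, role) as displayed above.
ROUTING_GROUPS = [
    ("NetUA", "c192.168.0.0/24", "192.168.0.0/24", "cluster-mgmt"),
    ("NetUA-01", "c169.254.0.0/16", "169.254.0.0/16", "cluster"),
    ("NetUA-01", "n192.168.0.0/24", "192.168.0.0/24", "node-mgmt"),
    ("NetUA-02", "c169.254.0.0/16", "169.254.0.0/16", "cluster"),
    ("NetUA-02", "n192.168.0.0/24", "192.168.0.0/24", "node-mgmt"),
    ("ua_vs1", "d192.168.0.0/24", "192.168.0.0/24", "data"),
]


def routing_group_name(role: str, subnet: str) -> str:
    """Build a routing group name using the inferred <prefix><subnet> rule."""
    return ROLE_PREFIX[role] + subnet


if __name__ == "__main__":
    for vserver, group, subnet, role in ROUTING_GROUPS:
        predicted = routing_group_name(role, subnet)
        status = "matches" if predicted == group else "differs"
        print(f"{vserver:9} {group:16} {status}")
```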

13. View the static routes that were automatically created for you within their respective routing groups.

NetUA::> network routing-groups route show

Routing

Vserver Group Destination Gateway Metric

--------- --------- --------------- --------------- ------

NetUA

c192.168.0.0/24

0.0.0.0/0 192.168.0.1 20

NetUA-01

n192.168.0.0/24

0.0.0.0/0 192.168.0.1 10

NetUA-02

n192.168.0.0/24

0.0.0.0/0 192.168.0.1 10

3 entries were displayed.

NetUA::>

14. Create a static route in the routing group that was automatically created when you created the LIF “uadata1”.

NetUA::> net routing-groups route create -vserver ua_vs1 -routing-group d192.168.0.0/24 -destination 0.0.0.0/0 -gateway 192.168.0.1

(network routing-groups route create)

NetUA::>

15. Review the static route of ua_vs1.

NetUA::> network routing-groups route show

Routing

Vserver Group Destination Gateway Metric

--------- --------- --------------- --------------- ------

NetUA

c192.168.0.0/24

0.0.0.0/0 192.168.0.1 20

NetUA-01

n192.168.0.0/24

0.0.0.0/0 192.168.0.1 10

NetUA-02

n192.168.0.0/24

0.0.0.0/0 192.168.0.1 10

ua_vs1

d192.168.0.0/24

0.0.0.0/0 192.168.0.1 20

4 entries were displayed.

NetUA::>
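Step 14 matters because, without a default route in ua_vs1's routing group, the data LIF can only answer clients on its own 192.168.0.0/24 subnet. The sketch below models this with a generic longest-prefix-match lookup in Python. It is not ONTAP's routing implementation, the client addresses are made up for illustration, and `next_hop` is a hypothetical helper.

```python
import ipaddress

# ua_vs1's routes: its connected subnet (routing group d192.168.0.0/24)
# and the static default route created in step 14. None = directly connected.
UA_VS1_ROUTES = [
    ("192.168.0.0/24", None),
    ("0.0.0.0/0", "192.168.0.1"),
]


def next_hop(client: str, routes=UA_VS1_ROUTES) -> str:
    """Return the gateway used to reach a client, or 'direct' if on-link."""
    addr = ipaddress.ip_address(client)
    matches = [
        (ipaddress.ip_network(dest), gateway)
        for dest, gateway in routes
        if addr in ipaddress.ip_network(dest)
    ]
    if not matches:
        raise LookupError(f"no route to {client}")
    # Longest prefix wins: the /24 subnet beats the /0 default route.
    _, gateway = max(matches, key=lambda match: match[0].prefixlen)
    return gateway if gateway is not None else "direct"


if __name__ == "__main__":
    print(next_hop("192.168.0.50"))   # client on the LIF's subnet
    print(next_hop("10.20.30.40"))    # client on another network
    try:
        next_hop("10.20.30.40", routes=UA_VS1_ROUTES[:1])  # before step 14
    except LookupError as err:
        print(err)
```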

16. The LIF has been created on NetUA-01. What happens if NetUA-01 fails? By default, a LIF is assigned to the default (system-defined) failover group.

Here, ua_vs1’s data LIF is hosted on NetUA-01, which is also its home node.

NetUA::> net int show

(network interface show)

Logical Status Network Current Current Is

Vserver Interface Admin/Oper Address/Mask Node Port Home

----------- ---------- ---------- ------------------ ------------- ------- ----

NetUA

cluster_mgmt up/up 192.168.0.101/24 NetUA-01 e0f false

NetUA-01

clus1 up/up 169.254.81.224/16 NetUA-01 e0a true

clus2 up/up 169.254.220.127/16 NetUA-01 e0b true

mgmt1 up/up 192.168.0.91/24 NetUA-01 e0f true

NetUA-02

clus1 up/up 169.254.124.94/16 NetUA-02 e0a true

clus2 up/up 169.254.244.74/16 NetUA-02 e0b true

mgmt1 up/up 192.168.0.92/24 NetUA-02 e0f true

ua_vs1

uadata1 up/up 192.168.0.123/24 NetUA-01 e0c true

8 entries were displayed.

NetUA::>

17. Show the current LIF failover groups and view the targets defined for the data and management LIFs.

NetUA::> net int failover show

(network interface failover show)

Logical Home Failover Failover

Vserver Interface Node:Port Policy Group

-------- --------------- --------------------- --------------- ---------------

NetUA

cluster_mgmt NetUA-01:e0c nextavail system-defined

Failover Targets: NetUA-01:e0c, NetUA-01:e0d,

NetUA-01:e0e, NetUA-01:e0f,

NetUA-02:e0c, NetUA-02:e0d,

NetUA-02:e0e, NetUA-02:e0f

NetUA-01

clus1 NetUA-01:e0a nextavail system-defined

clus2 NetUA-01:e0b nextavail system-defined

mgmt1 NetUA-01:e0f nextavail system-defined

Failover Targets: NetUA-01:e0f

NetUA-02

clus1 NetUA-02:e0a nextavail system-defined

clus2 NetUA-02:e0b nextavail system-defined

mgmt1 NetUA-02:e0f nextavail system-defined

Failover Targets: NetUA-02:e0f

ua_vs1

uadata1 NetUA-01:e0c nextavail system-defined

Failover Targets: NetUA-01:e0c, NetUA-01:e0d,

NetUA-01:e0e, NetUA-02:e0c,

NetUA-02:e0d, NetUA-02:e0e

8 entries were displayed.

NetUA::>

According to the output above, ua_vs1’s data LIF can fail over to another port on NetUA-01 (e0d or e0e) if its current port fails. If node NetUA-01 goes down, it will fail over to a port on NetUA-02.
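The sketch below is a simplified model of the behaviour just described: walk the failover targets in the order listed and take the first one that is still usable. It is only an illustration with hypothetical names. ONTAP's actual selection logic for the nextavail policy may differ, so do not read this as its algorithm.

```python
# Failover targets for uadata1, copied from the output above.
UADATA1_TARGETS = [
    "NetUA-01:e0c", "NetUA-01:e0d", "NetUA-01:e0e",
    "NetUA-02:e0c", "NetUA-02:e0d", "NetUA-02:e0e",
]


def pick_target(targets, current, down_nodes=(), down_ports=()):
    """Return the first listed target that is not the current or a failed port.

    Simplified model: a target is usable unless its node is down or the
    port itself is down.
    """
    for target in targets:
        node = target.split(":", 1)[0]
        if target == current or node in down_nodes or target in down_ports:
            continue
        return target
    return None  # no usable target left


if __name__ == "__main__":
    # Port e0c on NetUA-01 fails: the LIF stays on the same node.
    print(pick_target(UADATA1_TARGETS, "NetUA-01:e0c", down_ports={"NetUA-01:e0c"}))
    # The whole node NetUA-01 fails: the LIF moves to NetUA-02.
    print(pick_target(UADATA1_TARGETS, "NetUA-01:e0c", down_nodes={"NetUA-01"}))
```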

Let’s perform a manual failover (migration) of ua_vs1’s data LIF to NetUA-02.

NetUA::> net int migrate -vserver ua_vs1 -lif uadata1 -dest-port e0c -dest-node NetUA-02

(network interface migrate)

NetUA::> net int show

(network interface show)

Logical Status Network Current Current Is

Vserver Interface Admin/Oper Address/Mask Node Port Home

----------- ---------- ---------- ------------------ ------------- ------- ----

NetUA

cluster_mgmt up/up 192.168.0.101/24 NetUA-01 e0f false

NetUA-01

clus1 up/up 169.254.81.224/16 NetUA-01 e0a true

clus2 up/up 169.254.220.127/16 NetUA-01 e0b true

mgmt1 up/up 192.168.0.91/24 NetUA-01 e0f true

NetUA-02

clus1 up/up 169.254.124.94/16 NetUA-02 e0a true

clus2 up/up 169.254.244.74/16 NetUA-02 e0b true

mgmt1 up/up 192.168.0.92/24 NetUA-02 e0f true

ua_vs1

uadata1 up/up 192.168.0.123/24 NetUA-02 e0c false

8 entries were displayed.

NetUA::>

Here you can see that the LIF has moved from NetUA-01 to NetUA-02, and “Is Home” is now false for the data LIF.

The failover typically completes within a fraction of a second, so little or no client impact is expected. Failback depends on the LIF's auto-revert option, which you can check as follows.

NetUA::> network interface show -vserver ua_vs1 -lif uadata1 -fields auto-revert

vserver lif auto-revert

------- ------- -----------

ua_vs1 uadata1 false

NetUA::>

You can modify the auto-revert flag with the following command. If auto-revert is set to true, the LIF automatically fails back to its home node once that node is back online.

NetUA::> network interface modify -vserver ua_vs1 -lif uadata1 -auto-revert true

NetUA::> network interface show -vserver ua_vs1 -lif uadata1 -fields auto-revert

vserver lif auto-revert

------- ------- -----------

ua_vs1 uadata1 true

NetUA::>

You can also bring the LIF back to its home node manually with the following command.

NetUA::> network interface revert -vserver ua_vs1 -lif uadata1

NetUA::> net int show

(network interface show)

Logical Status Network Current Current Is

Vserver Interface Admin/Oper Address/Mask Node Port Home

----------- ---------- ---------- ------------------ ------------- ------- ----

NetUA

cluster_mgmt up/up 192.168.0.101/24 NetUA-01 e0f false

NetUA-01

clus1 up/up 169.254.81.224/16 NetUA-01 e0a true

clus2 up/up 169.254.220.127/16 NetUA-01 e0b true

mgmt1 up/up 192.168.0.91/24 NetUA-01 e0f true

NetUA-02

clus1 up/up 169.254.124.94/16 NetUA-02 e0a true

clus2 up/up 169.254.244.74/16 NetUA-02 e0b true

mgmt1 up/up 192.168.0.92/24 NetUA-02 e0f true

ua_vs1

uadata1 up/up 192.168.0.123/24 NetUA-01 e0c true

8 entries were displayed.

NetUA::>
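A few lines of Python can summarise how migrate, revert, Is Home and auto-revert relate. This is a toy state model with a hypothetical `Lif` class and a hypothetical `home_port_online` hook. It is meant only to show the behaviour described in the last few steps and does not talk to the cluster.

```python
from dataclasses import dataclass


@dataclass
class Lif:
    name: str
    home: str          # home "node:port"
    current: str       # current "node:port"
    auto_revert: bool = False

    @property
    def is_home(self) -> bool:
        """Mirrors the "Is Home" column: current location equals home."""
        return self.current == self.home

    def migrate(self, dest: str) -> None:
        """Like `network interface migrate`: move the LIF, keep its home."""
        self.current = dest

    def revert(self) -> None:
        """Like `network interface revert`: send the LIF back home."""
        self.current = self.home

    def home_port_online(self) -> None:
        """Hypothetical event: the home node/port is available again."""
        if self.auto_revert:
            self.revert()


if __name__ == "__main__":
    lif = Lif("uadata1", home="NetUA-01:e0c", current="NetUA-01:e0c")
    lif.migrate("NetUA-02:e0c")
    print(lif.current, lif.is_home)   # moved, not home
    lif.home_port_online()            # auto-revert is false: stays put
    lif.auto_revert = True
    lif.home_port_online()            # auto-revert is true: fails back
    print(lif.current, lif.is_home)
```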

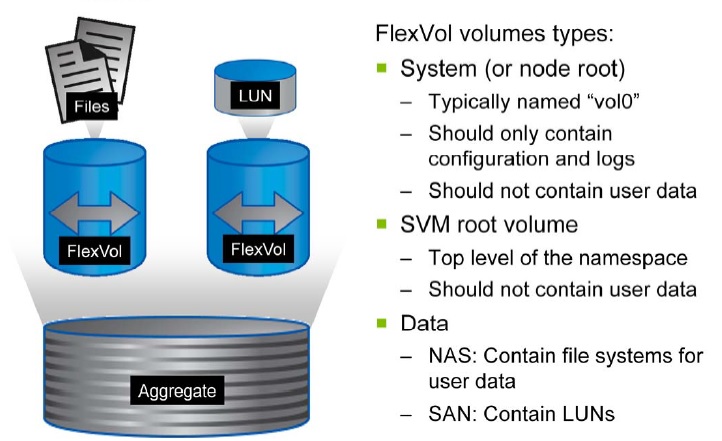

I hope this article has been informative. In the upcoming article, we will look more closely at NetApp volumes (FlexVol volumes, Infinite Volumes and FlexCache volumes).
